I am still working on the Kubernetes stack behind my personal website whenever I have some free time.

The goal is still the same – to build my own personal Kubernetes-powered data science/machine learning production deployment stack (And yes, I know about Kubeflow/AWS Sagemaker/Databricks/etc).

However my key objective now lies not with finding out whether using Kubernetes will save maintenance efforts (short answer – it does not save much maintenance efforts at a small scale), but with seeing how a best practice end state Kubernetes stack will look like and the effort needed to get there.

So what have I been up to? Some of my time in this period has been spent on fixing minor issues that were not noticed during the initial deployments.

Example 1, my WordPress pod is losing my custom theme every time the pod is restarted. Why is that? It is because the persistent volume seems to get overwritten each time by the Bitnami WordPress helm chart that I am using. The solution? I implemented a custom init container that repopulates the Wordress root directory by pulling a backup from S3.

Example 2, a subset of my pods have been crashing regularly due to a node becoming unhealthy. Why is that? It is because my custom Airflow and Dash containers seem to have unknown memory leaks, leading to resource starvation on the node causing pods to be evicted. The solution? I manually set up custom resource requests and limits for all Kubernetes containers after monitoring their typical utilisations. (I have been putting off doing this for a while thinking I could get by fine, but this incident has proven that I am wrong.)

The majority of my time has been spent on setting up proper (1) secret management (using Hashicorp Vault + External Secrets Operator) and (2) monitoring (using Prometheus + Grafana) stacks.

On secret management. Hashicorp Vault + External Secrets Operator have been relatively easy to use, with well-constructed and documented helm charts.

The concept behind Hashicorp Vault is relatively easy to understand (i.e. think of it like a password manager). The key trap for any beginner will be the sealing/unsealing part. The vault needs to be unsealed with a root token and a number of unseal keys for it to be functional. But if the vault instance ever gets restarted, the vault will become sealed and no one will be able to read the secrets (i.e. passwords) stored in it.

A sealed vault needs to be manually unsealed unless you have auto-unseal implemented. However implementing auto-unseal needs another secured key/secret management platform, which turns this problem into an iterative loop. This is one area that I feel is better solved with a managed solution (which unfortunately DigitalOcean does not have at the moment).

External Secrets Operator (ESO) works great, but it does take some time to understand the underlying concept. In short, Vault <- SecretStore <- ExternalSecret <- Secret. To get a secret automatically created, one needs to specify ExternalSecret (which tells ESO which secret to retrieve and create) and SecretStore (which tells ESO where and how to access the vault). The key beginner trap here will be the creation and deletion policy. If not set properly, secrets may be automatically deleted due to garbage collection, leading to services in Kubernetes going down (since most services rely on secrets in one form or another).

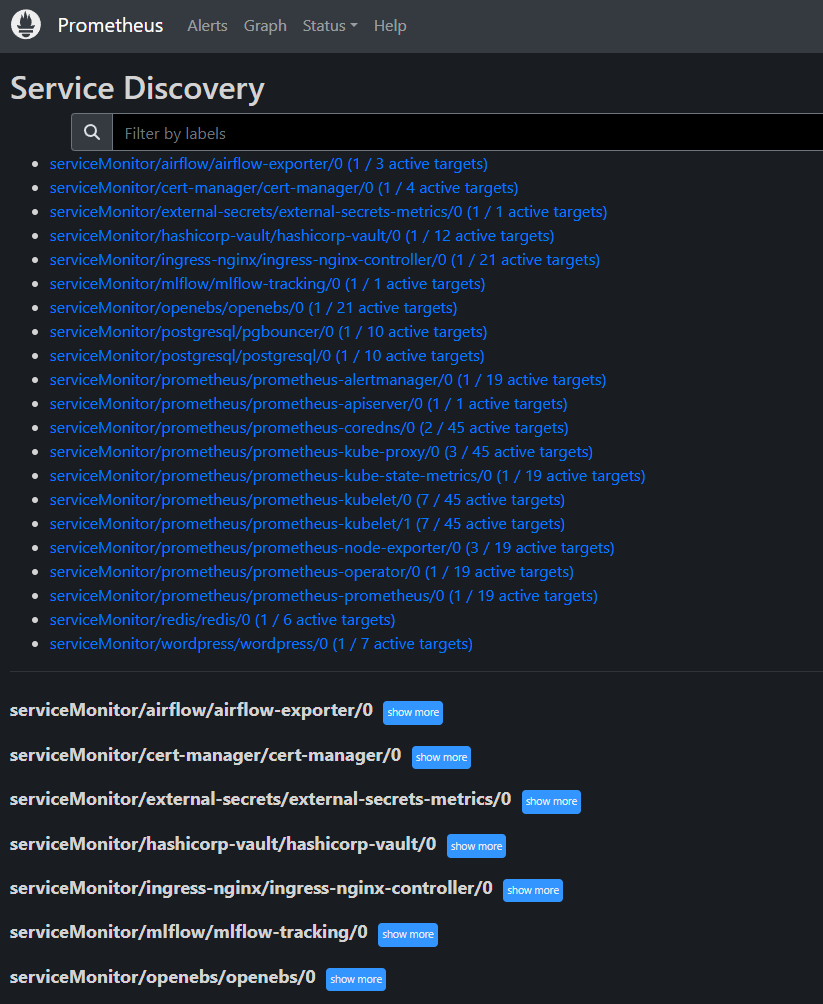

On monitoring. Prometheus is a very well-established and documented tool, so setting it up with a helm chart is a breeze (in fact there are so many Prometheus helm chart implementations that you can definitely pick one that suits your needs). One of the ways Prometheus works in short is, Prometheus -> Prometheus operator -> Service Monitor -> Service -> Exporter -> Pod/Container to be monitored. The key beginner trap here is to think of Prometheus as just another service, but it is in fact a stack of services. The first time the Prometheus pods were spun up after installation, my nodes were fully filled with two Prometheus pods unable to be scheduled.

The complexity of Prometheus comes from the sheer number of services, i.e. main Prometheus, Prometheus operator, alert manager and many many different types of exporters. While most services/helm charts have great support for Prometheus (i.e. already exposing metrics in Prometheus format), the challenge lies in getting these metrics to Prometheus as more often than not you need an exporter. The exporter can run centrally (e.g. kube-state-metrics exporter), run on each node (e.g. node exporter), or most often run as a sidecar as part of the pods (e.g. apache exporter for WordPress, flask exporter for Flask, postgres exporter for Postgres). Configuring all these exporters to make metrics visible in Prometheus is not hard, but definitely laborious.

For now, I have managed to get all metrics fed into Prometheus, except for Dash that I could not find a pre-built exporter for. The next steps will be to spin up Grafana so that I can better visualise the metrics and set up some key alerting rules. With this, hopefully I can avoid having my Dash instances stuck in a crash loop due to missing secrets for one month without me knowing about it.

After getting Prometheus + Grafana up and running, Loki the log aggregation system will be next. However the number of services that come with Loki does scare me as well.