About 2 months ago, I started migrating my entire personal stack onto Kubernetes from regular virtual servers.

So what has happened in the meantime? Have I freed up time from operations and maintenance to do more interesting data science development work yet?

Unfortunately, the answer is no, at least for now.

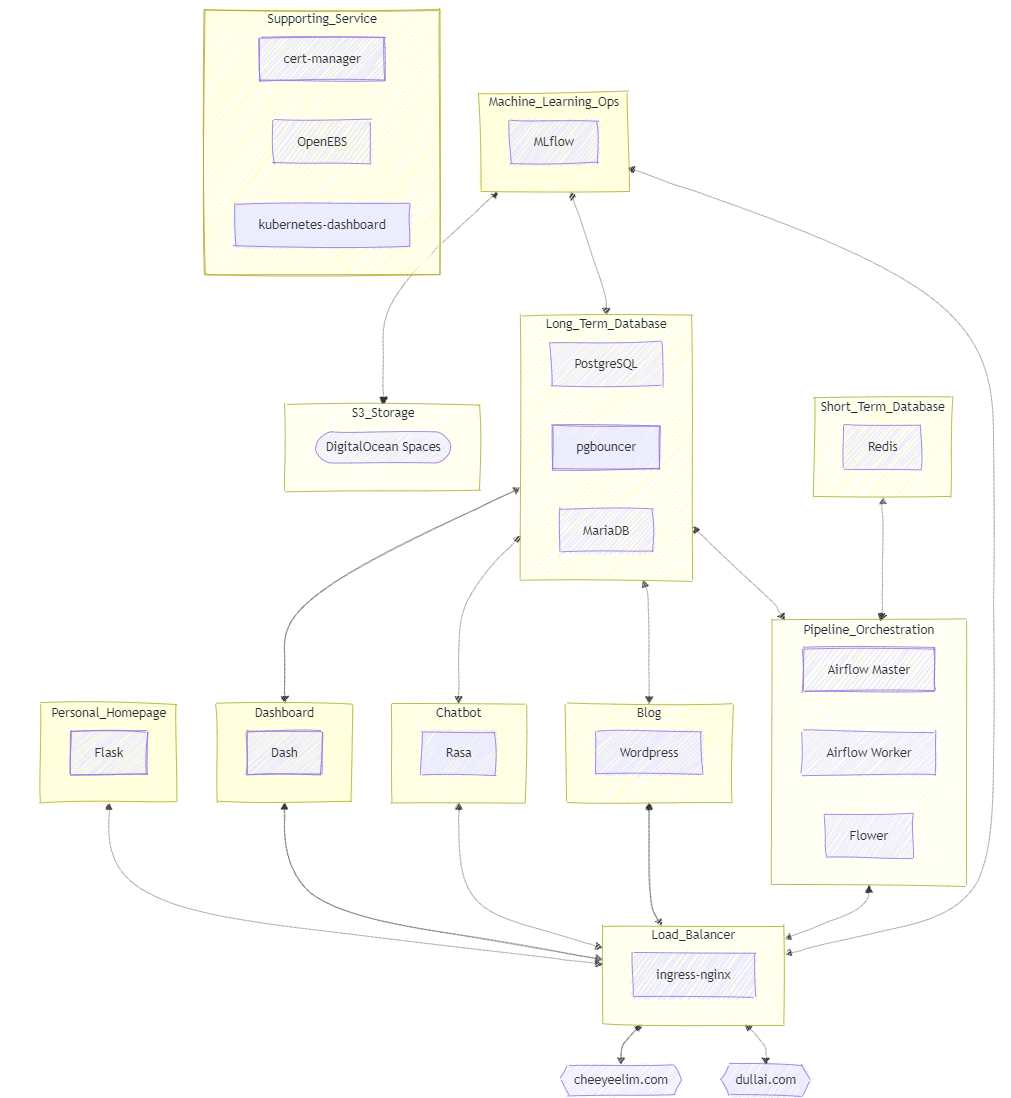

It turns out that migrating Airflow and MLflow onto Kubernetes is harder than I thought. Both of these tools need multiple backend services to run smoothly, including a relational database (PostgreSQL in my case) and an in-memory data store (Redis in my case).

Previously, to speed up development, I had been using the managed PostgreSQL and Redis instances offered by DigitalOcean. They are extremely easy to set up, and I was able to start using them within minutes.

However, I eventually ran into weird runtime issues in Airflow and MLflow that ultimately boiled down to specific configuration issues within PostgreSQL and Redis. While managed services are easier to get started with, debugging and customising them is typically harder because access to certain logs and backend configurations is restricted.

So I told myself: if I can work with managed PostgreSQL and Redis, how hard could it be to self-host them directly in Kubernetes? That would give me the freedom to customise them to work with Airflow and MLflow as needed.

Or so I thought.

I spent the next few days properly exposing the PostgreSQL and Redis ports via ingress-nginx, then another few days setting up pgbouncer connection pooling for PostgreSQL, then another few days setting up the Airflow environment to work with a custom DAG package, then another few days making sure all services were interacting correctly with the new self-hosted PostgreSQL and Redis instances.
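For that last step, a plain TCP reachability check is a reasonable first sanity test before digging into application logs. Here is a minimal sketch using only the Python standard library. The hostnames in `ENDPOINTS` are hypothetical placeholders rather than my actual service names, and the ports are simply the usual defaults for PostgreSQL, pgbouncer and Redis.

```python
import socket

# Hypothetical in-cluster service names with the usual default ports.
# Replace these with whatever your own Services are called.
ENDPOINTS = {
    "postgresql": ("postgres.example.svc.cluster.local", 5432),
    "pgbouncer": ("pgbouncer.example.svc.cluster.local", 6432),
    "redis": ("redis.example.svc.cluster.local", 6379),
}


def can_connect(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False


def check_endpoints(endpoints, timeout=2.0):
    """Map each endpoint name to whether its TCP port is reachable."""
    return {
        name: can_connect(host, port, timeout)
        for name, (host, port) in endpoints.items()
    }
```

Running `check_endpoints(ENDPOINTS)` from inside a pod gives a dictionary of name to `True`/`False`. A successful connection only shows that the port is reachable; it says nothing about authentication or whether the service behind it is healthy.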

After many more “few more days” than I expected, my entire personal stack has finally been fully migrated onto Kubernetes (components as shown in the attached diagram).

So what’s next, you ask? Is the platform all set and ready to go?

Not yet, unfortunately. To make sure the Kubernetes-based platform can keep running for longer with minimal maintenance, I will be setting up proper secret management, a monitoring solution and CI/CD integration next.

Another “few more days” to go, eh?