With the ending of June, it is now the halfway point of M6 competition. It may be a good time to do a quick review of my progress and learnings from the M6 competition so far.

(And also to get me into the habits of regularly writing blogs!)

Progress in M6 competition

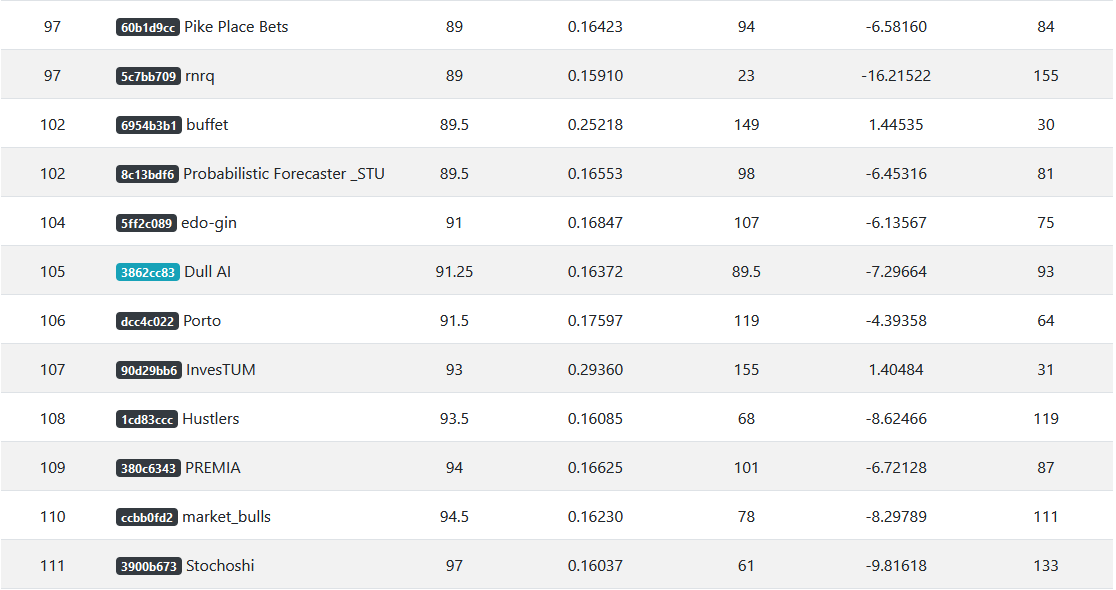

For a brief period at the beginning of M6 competition, I was among the top 20 on the leaderboard (overall rank). But ever since then, I have been languishing between rank 80-110.

I tried a few ways to improve the results (e.g. adding unit testing, expanding security universe), however the results either (1) did not improve, or (2) I lack the time/energy to fully implement them.

As of now, I still have 24 items/ideas on my to-do list to be tested or implemented to improve my solution for M6 competition!

That being said, I would still say that I have achieved my original goal, which is to use M6 competition as a motivator to build an investment pipeline (including automated data retrieval, forecasting and portfolio optimisation).

If you are interested in my exact methodology, perhaps as a counter-example of what not to do, I will share it once the competition is over.

Learnings from M6 competition

1. Getting access to required investment data is hard

Before you jump in and mention that everyone can easily get free price data from Yahoo Finance or other similar sources, I just want to say that I agree with you.

But getting access to price data is the first step. Typically you will also want to be able to screen for securities to create your investable universe. And this screening requires non-direct price data, e.g. market cap and valuation metrics. The problem becomes even tougher if you intend to create a cross-country/cross-exchange universe.

Assuming that you got access to a screening capability (the easiest way is to buy it from a provider), the next step is to build a stable (ideally automated) connection to a chosen data provider. This step can be tricky, as it depends on how much you are willing to pay for a data service, and this roughly correlates with how stable the provided data API will be.

Don’t even start thinking about getting data from multiple providers to be merged together. Just trying to get the data index (in this case, tickers or other security identifiers) to align will make you crazy if this is not part of your full-time job).

Lastly, once you have all these in place, there remains the question of data quality. I briefly tested adjusted OHLC EOD price data from a few retail investor-friendly data sources (i.e. annual subscription price of less than 4 digits).

My rough conclusions are:

- Yahoo Finance

- Pretty good pricing data that agrees with direct data from exchanges.

- But often has random outlier spikes (e.g. 100x price on a single day).

- Possible to get some fundamental data as well, but the API is very unstable.

- Free (but in grey area).

- Interactive Brokers

- Very good pricing data for recent dates.

- But historical adjusted prices are systematically off. Perhaps due to a different adjustment calculation method.

- Requires an IB account and a running instance of TWS to get data.

- Very cheap subscription price. Single digit per month to get all US pricing data.

- EOD Historical

- Just started testing it out, as it was also used by M6 competition to calculate rankings.

- No comment on data quality yet, as I have yet to test them.

- Very user-friendly API and reasonable pricing.

2. Forecasting prices is hard (really hard)

As with doing any kind of predictive modelling, forecasting stock prices are hard. Any type of price is hard to forecast, because there is often no ground truth to a price and the relationships between factors affecting a price change often.

A price is a reflection of not the intrinsic value of an item most of the time, but a reflection of how much someone is willing to pay for it at that moment in time.

With this in mind, I have a feeling (just a hypothesis that I have not yet checked out) that an approach that tries to model prices (or other price proxies) as point estimates are very unlikely to work out. A probabilistic approach seems to be the best bet, but it makes the computation and interpretation of the results (e.g. how to trade on the estimates) more difficult. Plus this approach also falls slightly out of my knowledge domain.

The difficulty of this problem can be seen by the huge fluctuation in the ranking on the leaderboard as well. A +/- 20 position move from month to month is not a rare occurrence. Although this may also be due to the (1) current ultra-volatile stock/economic environment and (2) changes in users’ forecasting methods across submissions.

This is a rather long competition that lasts for one year. But I have the feeling that for a stock market forecasting competition, it may need to run for 2-5 years to filter out the methods that are winning just due to chance. Then only we can see who is truly swimming naked. (Disclaimer: I am not implying that my method can stand the test of time, because I don’t think it can.)

3. Setting up trading strategy and portfolio allocation is also hard

This is another huge topic by itself, and often is rather distinct from the stock price forecasting problem. As mentioned by Prof. Makridakis (the M6 competition organiser) in one of his LinkedIn posts, there is not a strong correlation between accurate forecasting and good investment return.

As I have a rather basic understanding of how to build a profitable trading strategy and portfolio allocation, I am not able to comment much here. But I would say that in the absence of strong convictions, buying the market is not a bad idea in general.

4. Clean code/solution structuring helps

For most M6 participants, focusing on code/solution structuring is perhaps among the last thing they would do (or so I guess, please correct me if I am wrong). What I mean by code/solution structuring is ensuring that various parts of the codes (e.g. data processing, forecasting, portfolio optimisation) are written and structured according to software engineering best practices.

For me, this is the part that I spent the most time on. I know that some of you will be laughing at me because you think I deserve to rank near the bottom due to this (again I agree with you). But I truly enjoyed the time that I took to (1) structure my codes to follow the Python package cookiecutter template, (2) incorporate CI/CD practices (e.g. using Git, pre-commit), and (3) write clean codes with proper linting and docstrings.

As I work on the codebase on a part-time basis, having a clean code structure has enabled me to easily dive back into parts of the codebase. It reduces the time I need to figure out how my codes all link together, and hopefully can ensure that my codes are reusable if I do decide to repurpose them for something else.

Ending

That’s all for my midpoint review. I will continue to participate in M6 competition by making submissions, but I doubt I will have the time/energy to reverse the tide. Either way, I got a lot out of this competition already.

If you have read through my lengthy post, hope you gained some useful insights (or at least had a fun read)!